For years, AI has worked like a talented but isolated specialist — great at solving one task, but with little awareness of the broader business context. You trained a model to answer customer questions, another to crunch churn predictions, maybe even one to write code. But each lived in its own sandbox, often disconnected from the data and tools your team uses every day.

That’s quickly changing. Gartner predicts that by 2027, AI agents will augment or automate 50% of business decisions. We’re entering the age of agentic AI: a new paradigm where AI agents aren’t just intelligent, they’re aware of context, capable of action and deeply enmeshed in your organization’s digital nervous system. These agents don’t just respond — they collaborate, orchestrate and decide, drawing from your databases, tools and internal knowledge in real time.

And behind this revolution? Platforms like AWS that connect intelligence with real-world data and workflows.

From Smart Widgets to Autonomous Collaborators

Traditional AI felt like installing smarter widgets into your stack. Ask a chatbot a question, and it gives you an answer. Run a model on a dataset, and it returns predictions. But the burden of stitching it all together — the orchestration, context-sharing, security and versioning — still fell to humans or brittle pipelines.

Agentic AI flips that script.

Now, instead of isolated tools, you have AI agents that know where your data lives, how your workflows operate, and what your business is trying to achieve. They’re not static endpoints. They’re decision-makers that move across systems, understand policies and take initiative.

But to do so, they need infrastructure that understands both AI and enterprise-grade data systems. That’s where AWS shines. And this evolution isn’t just theoretical. Companies are already experimenting with agentic AI in ways that show how quickly it moves from concept to impact.

Real-World Use Cases

Automated Reporting

Finance teams are already using Amazon Bedrock Knowledge Bases and Amazon Redshift to let AI agents generate summaries, answer natural-language questions over their data and cut time-to-insight.

Intelligent Support

In healthcare, teams are using Amazon Bedrock to build generative AI agents that produce discharge summaries and compile comprehensive patient profiles. It’s a glimpse into how intelligent support agents can reduce escalations by surfacing the right context at the right time.

Operational Planning

In operations, we’re seeing agentic AI workflows powered by DynamoDB, Amazon Redshift, and AWS Step Functions orchestrate real-time decisions. For example, agents can monitor stock levels, optimize ETL pipelines, and trigger downstream systems like email alerts and ticket creation — all without manual intervention.
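To make that pattern concrete, here is a minimal sketch of the orchestration side, written in Python as an Amazon States Language (ASL) definition for Step Functions. It assumes three Lambda functions whose names and ARNs (`check-stock`, `send-alert`, `open-ticket`) are placeholders, and that `check-stock` returns a JSON object with a numeric `stock` field; the threshold is likewise illustrative.

```python
import json

# Placeholder ARNs for hypothetical Lambda functions; substitute your own.
CHECK_STOCK_ARN = "arn:aws:lambda:us-east-1:123456789012:function:check-stock"
SEND_ALERT_ARN = "arn:aws:lambda:us-east-1:123456789012:function:send-alert"
OPEN_TICKET_ARN = "arn:aws:lambda:us-east-1:123456789012:function:open-ticket"


def build_stock_monitor(threshold: int) -> dict:
    """Return an ASL definition: check stock, and if it is low, alert and open a ticket."""
    return {
        "Comment": "Check stock and act when it falls below a threshold",
        "StartAt": "CheckStock",
        "States": {
            "CheckStock": {
                "Type": "Task",
                "Resource": CHECK_STOCK_ARN,  # assumed to return {"stock": <number>, ...}
                "Next": "IsStockLow",
            },
            "IsStockLow": {
                "Type": "Choice",
                "Choices": [
                    {"Variable": "$.stock", "NumericLessThan": threshold, "Next": "NotifyAndTicket"}
                ],
                "Default": "StockOk",
            },
            "NotifyAndTicket": {
                "Type": "Parallel",  # alert and ticket run side by side
                "Branches": [
                    {"StartAt": "SendAlert",
                     "States": {"SendAlert": {"Type": "Task", "Resource": SEND_ALERT_ARN, "End": True}}},
                    {"StartAt": "OpenTicket",
                     "States": {"OpenTicket": {"Type": "Task", "Resource": OPEN_TICKET_ARN, "End": True}}},
                ],
                "End": True,
            },
            "StockOk": {"Type": "Succeed"},
        },
    }


definition = json.dumps(build_stock_monitor(threshold=10), indent=2)
print(definition)
```

The resulting JSON string could be passed as the `definition` argument of boto3’s Step Functions `create_state_machine` call, together with a `name` and an execution `roleArn`. Keeping the alert and the ticket in a `Parallel` state means neither waits on the other.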

These use cases highlight the promise of agentic AI. But what makes them possible isn’t luck or isolated pilots — it’s an underlying design pattern. To understand how these systems actually work, we need to look at agentic architecture.

Understanding Agentic AI Workflows

Agentic AI workflows represent the next evolution of AI systems, moving beyond single prompts toward structured, multi-step processes where agents can reason, act, and adapt over time. Several frameworks have emerged to support these workflows, each with distinct strengths.

- Amazon Bedrock AgentCore (AWS): A secure, enterprise-grade platform for deploying and operating AI agents. Its runtime isolates each session in its own microVM and supports long-running sessions of up to 8 hours; it also offers memory and observability services and integrates with the wider AWS ecosystem (IAM, CloudWatch). AgentCore is framework-agnostic, so you can run agents built with tools like LangGraph or AutoGen while benefiting from AWS-level security and scalability.

- LangGraph (LangChain team): Built for orchestration, LangGraph models agent logic as directed graphs. This provides explicit control, centralized state management, built-in persistence, and strong debugging capabilities through LangSmith. It is ideal for multi-step workflows where auditability and precise control matter.

- AutoGen (Microsoft): Focused on conversational multi-agent systems, AutoGen enables agents with different roles to collaborate through natural language. It supports flexible patterns (two-agent, group, or nested chats), integrates with APIs and tools, and comes with AutoGen Studio for low-code prototyping.
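LangGraph’s central idea, agent logic as a directed graph of steps over shared state, can be illustrated without the library itself. The sketch below is plain Python and deliberately does not use LangGraph’s API; the `Graph` class, node names and retry rule are all hypothetical, chosen only to show explicit transitions, centralized state and a step trace.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, object]
Node = Callable[[State], State]            # returns a partial update to the shared state
Router = Callable[[State], Optional[str]]  # picks the next node name, or None to finish


class Graph:
    """Tiny directed-graph runner: nodes update shared state, edges pick what runs next."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routes: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: Optional[str]) -> None:
        self.routes[src] = lambda _state: dst  # fixed transition (None ends the run)

    def add_conditional_edge(self, src: str, router: Router) -> None:
        self.routes[src] = router  # transition decided from the current state

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        node: Optional[str] = start
        trace: List[str] = []
        while node is not None:
            if len(trace) >= max_steps:
                raise RuntimeError("step limit reached; the graph may be cycling")
            state = {**state, **self.nodes[node](state)}  # merge the node's update
            trace.append(node)
            router = self.routes.get(node)
            node = router(state) if router else None
        return {**state, "trace": trace}  # the trace doubles as an audit trail


# Example: retry a data fetch until it returns rows, then summarize.
def plan(state: State) -> State:
    return {"query": f"revenue for {state['region']}"}


def fetch(state: State) -> State:
    attempts = int(state.get("attempts", 0))
    return {"attempts": attempts + 1, "rows": 3 if attempts >= 1 else 0}  # first call "fails"


def summarize(state: State) -> State:
    return {"summary": f"{state['rows']} rows for {state['region']}"}


g = Graph()
g.add_node("plan", plan)
g.add_node("fetch", fetch)
g.add_node("summarize", summarize)
g.add_edge("plan", "fetch")
g.add_conditional_edge("fetch", lambda s: "summarize" if s["rows"] else "fetch")
g.add_edge("summarize", None)

result = g.run("plan", {"region": "EMEA"})
print(result["trace"])  # ['plan', 'fetch', 'fetch', 'summarize']
```

The explicit router on `fetch` is what gives this style its auditability: every transition is a named function you can test, and the trace records exactly which path a run took.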

When to Use Each

- AgentCore: Enterprise deployments needing a secure, persistent, and scalable runtime.

- LangGraph: Complex workflows with structured logic and fine-grained control.

- AutoGen: Conversational or research-driven scenarios where multiple agents collaborate dynamically.

In short, AgentCore provides the secure backbone, LangGraph defines explicit agent workflows, and AutoGen enables natural conversational collaboration. Choosing the right framework depends on whether your priority is scale, control, or collaboration.

What Is Agentic Architecture?

Think of agentic architecture as the framework that gives AI agents the rules, connections and safeguards they need to move from isolated tools to trusted participants in enterprise workflows.

Several principles define this foundation:

- Security and trust: The system must enforce identity, permission boundaries and isolation at the architectural level. Agents shouldn’t rely on ad-hoc controls; security is part of the core design.

- Scalability and reliability: The architecture has to scale horizontally to handle unpredictable spikes in agent workloads, with fault tolerance and fallback paths built into the infrastructure.

- Identity-aware orchestration: Workflows need a fabric that can consistently carry user or system identities across services, ensuring that every action is executed under the right scope.

- Transparency and observability: Monitoring, logging and auditing must be first-class features of the architecture, giving teams visibility into agent activity across all layers of the system.

AWS underscores these as foundations for “production-ready” agents — emphasizing that architecture, not just models, determines whether agents can operate safely and at scale. These principles are what separate a true agentic architecture from a patchwork of disconnected AI tools, ensuring the environment is prepared to support agents reliably without forcing every team to reinvent the wheel.
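Two of these principles, permission boundaries and identity-aware orchestration with built-in auditing, can be sketched in a few lines. This is a minimal in-process illustration, not an AWS service or IAM: the `Identity` and `ScopedExecutor` classes, the scope strings and the action names are hypothetical. Every call carries the identity it runs under, is checked against a required scope, and lands in an audit log whether or not it succeeds.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, FrozenSet, List


@dataclass(frozen=True)
class Identity:
    principal: str          # the user or service the agent is acting for
    scopes: FrozenSet[str]  # permissions granted to that principal


@dataclass
class ScopedExecutor:
    """Runs agent actions only under a caller identity that holds the required scope."""
    actions: Dict[str, Callable[..., Any]] = field(default_factory=dict)
    required_scope: Dict[str, str] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    def register(self, name: str, scope: str, fn: Callable[..., Any]) -> None:
        self.actions[name] = fn
        self.required_scope[name] = scope

    def invoke(self, who: Identity, name: str, **kwargs: Any) -> Any:
        # Unknown actions have no required scope, so they are denied by default.
        allowed = name in self.required_scope and self.required_scope[name] in who.scopes
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "principal": who.principal,
            "action": name,
            "allowed": allowed,
        })  # every attempt is logged, including denials
        if not allowed:
            raise PermissionError(f"{who.principal} may not call {name}")
        return self.actions[name](**kwargs)


executor = ScopedExecutor()
executor.register("read_orders", "orders:read", lambda customer_id: [f"order-1 for {customer_id}"])
executor.register("issue_refund", "refunds:write", lambda order_id: f"refunded {order_id}")

support_agent = Identity("support-agent", frozenset({"orders:read"}))
print(executor.invoke(support_agent, "read_orders", customer_id="c42"))
try:
    executor.invoke(support_agent, "issue_refund", order_id="order-1")
except PermissionError as err:
    print("denied:", err)
```

Logging before the permission check is the design choice that matters here: denied attempts are often the most interesting entries in an agent’s audit trail.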

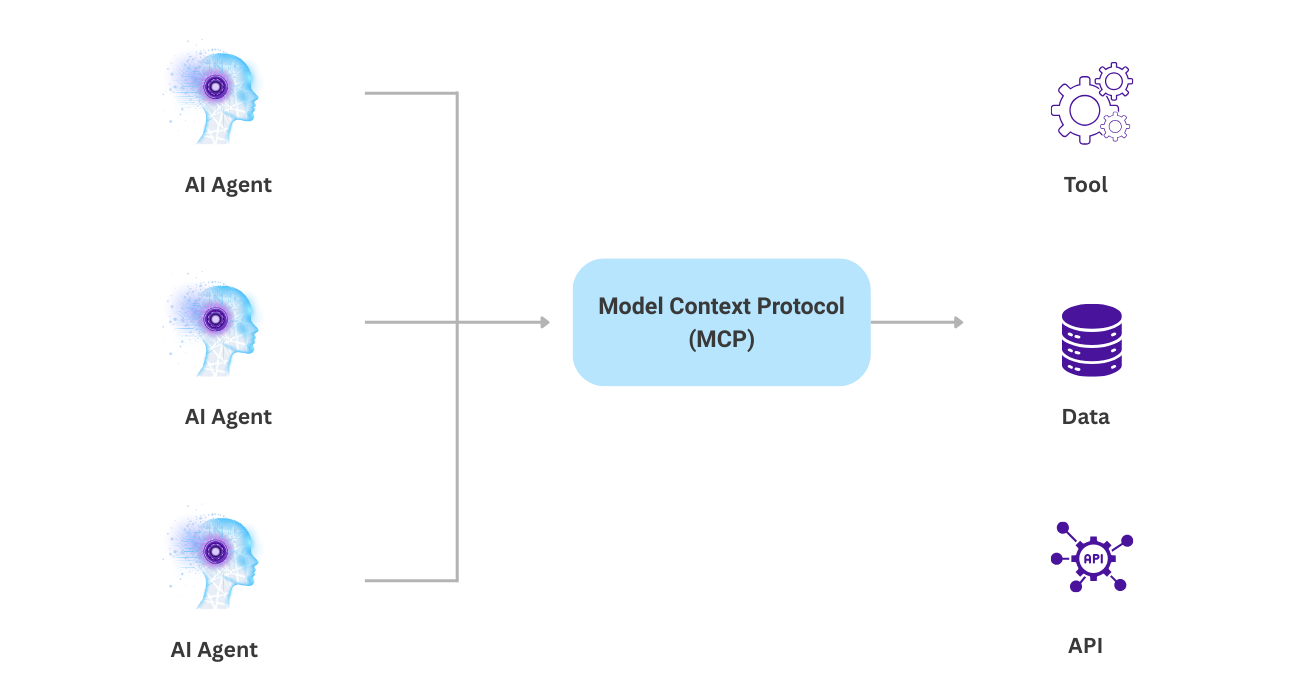

The Model Context Protocol: Solving the N×M Problem

Agentic architectures outline the foundations, but there’s still the messy reality of connecting agents to the dozens of tools and data systems enterprises already rely on. This is where many AI projects stall: the integrations.

In a world of AI agents and ever-growing tools, integration becomes the bottleneck. If each tool (N) needs a custom connection to each AI model or agent (M), you’re quickly buried under an unscalable N×M problem.
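The arithmetic behind the N×M problem is easy to check. With point-to-point integrations, every tool needs a connector per agent or model; with a shared protocol, each tool implements the protocol once and each agent speaks it once, so the count grows as N + M instead. The tool and agent counts below are illustrative, not survey data.

```python
def point_to_point(n_tools: int, m_agents: int) -> int:
    # Every tool needs a bespoke connector for every agent or model.
    return n_tools * m_agents


def via_shared_protocol(n_tools: int, m_agents: int) -> int:
    # Each tool implements the protocol once; each agent implements a client once.
    return n_tools + m_agents


for n, m in [(10, 3), (50, 10), (200, 25)]:
    print(f"{n} tools x {m} agents: {point_to_point(n, m)} connectors "
          f"vs {via_shared_protocol(n, m)} protocol implementations")
```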

The emerging answer is the Model Context Protocol (MCP), an open standard introduced by Anthropic in late 2024 that gives agents a common way to access structured context, invoke actions (tools) and reuse prompts across systems.

Think of MCP like a universal translator for AI. Instead of wiring each model to each app, you expose business capabilities and data as standardized context and actions. Agents can then reason over this shared fabric, no matter where the data or logic lives.
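A minimal sketch of what “exposing capabilities as standardized actions” means in practice, loosely modeled on MCP’s JSON-RPC 2.0 `tools/list` and `tools/call` methods. It is simplified and in-process: there is no transport, no initialization handshake and no official MCP SDK, and the `get_stock_level` tool and its inventory data are hypothetical. The point is that any client able to send these two messages can discover and call the tool without a bespoke integration.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Dict[str, Any]] = {}  # tool name -> {"fn": callable, "spec": advertised schema}


def tool(name: str, description: str, input_schema: dict) -> Callable:
    """Register a function as a discoverable tool with a JSON Schema for its inputs."""
    def decorator(fn: Callable) -> Callable:
        TOOLS[name] = {"fn": fn, "spec": {"name": name, "description": description,
                                          "inputSchema": input_schema}}
        return fn
    return decorator


@tool("get_stock_level", "Return units on hand for a SKU",
      {"type": "object", "properties": {"sku": {"type": "string"}}, "required": ["sku"]})
def get_stock_level(sku: str) -> int:
    return {"SKU-1": 42, "SKU-2": 0}.get(sku, 0)  # stand-in for a real inventory lookup


def handle(raw: str) -> str:
    """Answer one JSON-RPC request for tool discovery or invocation."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})

    def error(code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                           "error": {"code": code, "message": message}})

    if method == "tools/list":
        result = {"tools": [entry["spec"] for entry in TOOLS.values()]}
    elif method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return error(-32602, "unknown tool")
        value = entry["fn"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        return error(-32601, "method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


print(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
print(handle(json.dumps({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                         "params": {"name": "get_stock_level", "arguments": {"sku": "SKU-1"}}})))
```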

And the need is urgent: 95% of IT leaders say integration is the primary barrier to AI adoption, yet only 28% of enterprise applications are actually connected. MCP is designed to close that gap.

In AWS, this looks like:

- Amazon Bedrock: Hosting foundation models with native tooling support.

- AWS Lambda and Step Functions: Turning business logic into composable, callable actions.

- API Gateway and EventBridge: Enabling asynchronous, event-driven orchestration.
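Here is a sketch of how a Lambda function can turn business logic into small callable actions. The `lambda_handler(event, context)` entry point is Lambda’s standard Python signature, but the event shape (`action` plus `params`), the API Gateway-style `statusCode`/`body` response and both actions are assumptions for illustration; Bedrock Agents action groups and Step Functions each deliver their own event formats, which you would map onto this dispatcher.

```python
import itertools
import json

_ticket_ids = itertools.count(1)  # in-memory counter; a real system would use its ticketing API


# Hypothetical business actions an agent may call.
def get_order_status(order_id: str) -> dict:
    orders = {"A-100": "shipped", "A-101": "processing"}  # stand-in for a database lookup
    return {"order_id": order_id, "status": orders.get(order_id, "not_found")}


def create_ticket(summary: str, priority: str = "normal") -> dict:
    return {"ticket_id": f"T-{next(_ticket_ids):04d}", "summary": summary, "priority": priority}


ACTIONS = {"get_order_status": get_order_status, "create_ticket": create_ticket}


def lambda_handler(event, context):
    """Dispatch {"action": ..., "params": {...}} to a registered action (assumed event shape)."""
    name = event.get("action")
    action = ACTIONS.get(name)
    if action is None:
        return {"statusCode": 400, "body": json.dumps({"error": f"unknown action {name!r}"})}
    try:
        result = action(**event.get("params", {}))
    except TypeError as err:  # missing or unexpected parameters
        return {"statusCode": 400, "body": json.dumps({"error": str(err)})}
    return {"statusCode": 200, "body": json.dumps(result)}


# Local usage: Lambda passes a context object, but this handler ignores it.
print(lambda_handler({"action": "get_order_status", "params": {"order_id": "A-100"}}, None))
```

Keeping the registry explicit means the agent can only reach actions someone deliberately exposed, which pairs naturally with scoping the function’s IAM role.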

The result? Agents that can plug into your stack like seasoned operators, not fragile scripts.

Databases in the Agentic Era

Enterprises rely heavily on databases to drive decision-making — but much of that data still goes untapped. Despite gradual progress, most organizations remain far from fully leveraging its value.

Agents can now query, reason over, and even join across:

- Amazon RDS for structured operational data

- Aurora for scalable, cloud-native transactions

- DynamoDB for high-speed NoSQL lookups

- Redshift for analytical queries at warehouse scale

Crucially, agents don’t just run static SQL. They reason about schema, infer intent and adapt queries based on live metadata.
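As a rough illustration of “adapting queries based on live metadata”, the sketch below uses Python’s built-in `sqlite3` as a stand-in for RDS or Aurora. Before running anything, it reads the current schema (`PRAGMA table_info`), rejects columns that don’t exist and binds all values as parameters. The `orders` table and its rows are hypothetical; against PostgreSQL or MySQL you would read `information_schema.columns` instead.

```python
import sqlite3
from typing import Dict, List, Sequence, Tuple


def live_columns(conn: sqlite3.Connection, table: str) -> Dict[str, str]:
    """Read column names and declared types from the database at call time."""
    tables = {row[0] for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'")}
    if table not in tables:
        raise ValueError(f"unknown table {table!r}")
    # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
    return {row[1]: row[2] for row in conn.execute(f'PRAGMA table_info("{table}")')}


def safe_select(conn: sqlite3.Connection, table: str, columns: Sequence[str],
                filters: Dict[str, object]) -> List[Tuple]:
    """Build a parameterized SELECT, rejecting any column the live schema doesn't have."""
    schema = live_columns(conn, table)
    unknown = [c for c in [*columns, *filters] if c not in schema]
    if unknown:
        raise ValueError(f"columns not in live schema: {unknown}")
    cols = ", ".join(f'"{c}"' for c in columns)
    where = " AND ".join(f'"{c}" = ?' for c in filters) or "1 = 1"
    sql = f'SELECT {cols} FROM "{table}" WHERE {where}'
    return conn.execute(sql, list(filters.values())).fetchall()  # values bound, never interpolated


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id TEXT, customer_id TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("A-100", "c42", "open"), ("A-101", "c42", "shipped"), ("A-102", "c7", "open")],
)
print(safe_select(conn, "orders", ["id"], {"customer_id": "c42", "status": "open"}))  # [('A-100',)]
```

Validating identifiers against the live schema, rather than trusting whatever column names a model proposes, is what lets an agent adapt to schema changes without opening the door to injected SQL.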

This opens the door to use cases like:

- A marketing agent pulling the latest campaign ROI from Redshift

- A support bot checking open orders in RDS before responding

- A logistics agent monitoring real-time stock levels from DynamoDB

No more brittle ETL pipelines that exist just to “teach” your AI what your business already knows.

Knowledge Bases: Giving AI a Corporate Memory

Databases give you facts. But what about policies, product nuances or institutional wisdom?

This is where enterprise knowledge bases shine. AWS recently introduced Knowledge Bases for Amazon Bedrock (now Amazon Bedrock Knowledge Bases), letting you ingest curated internal content (think Confluence pages, SOPs, product manuals) into a vector store that agents can query in real time.

The magic isn’t just in storing the info; it’s in how AI retrieves and applies it in context. With retrieval-augmented generation (RAG), agents can now answer questions like:

- “What’s our return policy in France?”

- “How do we handle downtime notifications for Tier 1 customers?”

- “Has this bug been reported before?”
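The retrieval step is what makes RAG work, and its shape can be shown with a toy. The sketch below scores documents by bag-of-words cosine similarity, a deliberately simple stand-in for the embedding model and vector store that Bedrock Knowledge Bases manage for you, then builds a prompt grounded in the top match. The three documents are made-up sample content.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

# Made-up sample content standing in for ingested wiki pages and SOPs.
DOCS: Dict[str, str] = {
    "returns-fr": "France return policy: customers may return unused items within 30 days for a full refund.",
    "tier1-downtime": "Tier 1 customers receive downtime notifications by email and phone within 15 minutes.",
    "bug-1234": "Known bug: export to CSV fails when the report contains emoji.",
}


def tokens(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: Dict[str, str], k: int = 1) -> List[Tuple[str, str]]:
    """Rank documents by similarity to the question and keep the top k."""
    q = Counter(tokens(question))
    ranked = sorted(docs.items(), key=lambda kv: cosine(q, Counter(tokens(kv[1]))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: Dict[str, str], k: int = 1) -> str:
    """Augment the question with retrieved passages, tagged by ID so answers can cite them."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(question, docs, k))
    return f"Answer using only the sources below and cite them.\n{context}\n\nQuestion: {question}"


print(build_prompt("What's our return policy in France?", DOCS))
```

Swapping the toy scorer for real embeddings changes the quality of the ranking, not the structure: retrieve, then generate from what was retrieved.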

And because ingestion, retrieval and generation are managed through Bedrock, you juggle fewer third-party tools and leave fewer gaps for data compliance to drift.

Meet Claude: Agentic Thinking in Action

Anthropic’s Claude is a standout among foundation models — not just for its language chops, but for its reasoning and planning abilities, making it especially suited for agentic use cases.

Through Amazon Bedrock, you can integrate Claude into your stack with native access controls and observability. But where it really shines is when you pair it with databases and knowledge bases.
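A sketch of how Claude is typically given a tool through Bedrock’s Converse API: the request declares the tool’s name, description and JSON Schema under `toolConfig`, and when the model wants to use it the response stops with `stopReason` set to `tool_use` and carries `toolUse` blocks. The model ID is an example only (check which Claude models are enabled in your account and region), the `get_contract_tier` tool is hypothetical, and the actual `boto3` call is left commented out so the sketch runs without AWS credentials.

```python
from typing import List

# Example model ID; confirm availability in your account and region.
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def build_converse_request(question: str) -> dict:
    """Keyword arguments for bedrock-runtime's converse() call, declaring one tool."""
    return {
        "modelId": MODEL_ID,
        "system": [{"text": "You are a support agent. Look facts up with tools before answering."}],
        "messages": [{"role": "user", "content": [{"text": question}]}],
        "toolConfig": {
            "tools": [{
                "toolSpec": {
                    "name": "get_contract_tier",  # hypothetical tool backed by your own code
                    "description": "Look up a customer's contract tier by customer ID.",
                    "inputSchema": {"json": {
                        "type": "object",
                        "properties": {"customer_id": {"type": "string"}},
                        "required": ["customer_id"],
                    }},
                }
            }]
        },
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.2},
    }


def tool_requests(response: dict) -> List[dict]:
    """Extract toolUse blocks when the model pauses to ask for a tool call."""
    if response.get("stopReason") != "tool_use":
        return []
    return [block["toolUse"] for block in response["output"]["message"]["content"] if "toolUse" in block]


# With AWS credentials configured:
# import boto3
# client = boto3.client("bedrock-runtime")
# response = client.converse(**build_converse_request("What tier is customer c42 on?"))
# for call in tool_requests(response):
#     ...run your function for call["name"] with call["input"], then send a toolResult message back
```

Access control stays on the AWS side: the IAM role making the call decides which models it may invoke, and your own code decides what each declared tool is actually allowed to do.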

Imagine Claude receiving a support query. Instead of replying blindly, it:

- Checks the customer’s contract tier in RDS

- Looks up past issue history in DynamoDB

- Pulls troubleshooting steps from your internal wiki

- Escalates via an internal ticketing API if needed

That’s not just an assistant — it’s an autonomous agent driving outcomes.
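The four steps above can be sketched as an orchestration function. In a real deployment Claude would choose which lookups to make via tool calls; here those decisions are hard-coded as simple rules so the control flow is visible. The dictionaries stand in for RDS, DynamoDB and the wiki, and the `Ticketing` class for the ticketing API; all of the names, data and escalation rules are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# In-memory stand-ins (all hypothetical).
CONTRACTS: Dict[str, str] = {"c42": "tier1", "c7": "standard"}                  # "RDS": contract tier
ISSUE_HISTORY: Dict[str, List[str]] = {"c42": ["login-timeout", "login-timeout"]}  # "DynamoDB": past issues
WIKI: Dict[str, str] = {"login-timeout": "Clear the SSO session cache and retry."}  # internal wiki


@dataclass
class Ticketing:
    """Stand-in for an internal ticketing API."""
    opened: List[dict] = field(default_factory=list)

    def escalate(self, customer_id: str, issue: str, reason: str) -> str:
        self.opened.append({"customer_id": customer_id, "issue": issue, "reason": reason})
        return f"TICKET-{len(self.opened)}"


def handle_support_query(customer_id: str, issue: str, tickets: Ticketing) -> dict:
    tier = CONTRACTS.get(customer_id, "standard")        # 1. contract tier
    history = ISSUE_HISTORY.get(customer_id, [])         # 2. past issue history
    steps = WIKI.get(issue)                              # 3. troubleshooting steps
    repeat_issue = history.count(issue) >= 2
    if steps is None or (tier == "tier1" and repeat_issue):  # 4. escalate when needed
        reason = "no documented fix" if steps is None else "repeat issue for a tier1 customer"
        return {"action": "escalated", "ticket": tickets.escalate(customer_id, issue, reason),
                "reason": reason}
    return {"action": "answered", "reply": steps, "tier": tier}


tickets = Ticketing()
print(handle_support_query("c42", "login-timeout", tickets))  # repeat tier1 issue -> escalated
print(handle_support_query("c7", "login-timeout", tickets))   # documented fix -> answered
```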

Tying It All Together: The AWS Agentic Stack

Here’s a typical pattern we see emerging among forward-thinking teams:

- Amazon Bedrock to host and manage foundation models like Claude

- Amazon Bedrock Knowledge Bases for curated enterprise content

- Lambda + Step Functions to expose internal tools and workflows as callable actions

- Redshift, Aurora, DynamoDB to provide real-time data context

- IAM, CloudTrail, GuardDuty for strong security and traceability

By wiring these together, you get an architecture where AI doesn’t sit on the sidelines — it lives at the heart of your operations.

Security & Governance: No Agent Left Unchecked

AI agents are powerful, but with great power comes the need for guardrails, and the cost of mistakes is real. IBM reports that the average global cost of a data breach hit $4.88 million in 2024, up roughly 10% from $4.45 million the year prior. Governance simply isn’t optional.

AWS provides the toolkit to enforce responsible usage:

- IAM roles and scopes ensure agents only access what they’re authorized to.

- Amazon Bedrock Guardrails help block prompt injection and enforce response policies, while system prompts and input validation add extra protection.

- CloudTrail and Bedrock logging give teams visibility into who (or what) did what, and when.

- GuardDuty and Security Hub strengthen threat detection and compliance monitoring across agent activity.

As agents grow more autonomous, controls like these will become non-negotiable.
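Least privilege is easiest to reason about in the policy itself. The sketch below builds an IAM policy document that lets an agent invoke one Bedrock model and only read from one DynamoDB table; the region, account ID, table name and model ID are placeholders. The `is_allowed` helper is a rough local sanity check (explicit Deny wins, otherwise any matching Allow, with `*` wildcards), not IAM’s actual evaluation logic, which also weighs permissions boundaries, SCPs and conditions; use the IAM policy simulator or IAM Access Analyzer for real validation.

```python
import fnmatch
import json

REGION, ACCOUNT = "us-east-1", "123456789012"  # placeholders
ORDERS_TABLE_ARN = f"arn:aws:dynamodb:{REGION}:{ACCOUNT}:table/Orders"
MODEL_ARN = f"arn:aws:bedrock:{REGION}::foundation-model/anthropic.claude-3-5-sonnet-20240620-v1:0"

agent_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "InvokeOneModel", "Effect": "Allow",
         "Action": ["bedrock:InvokeModel"], "Resource": [MODEL_ARN]},
        {"Sid": "ReadOrdersOnly", "Effect": "Allow",
         "Action": ["dynamodb:GetItem", "dynamodb:Query"], "Resource": [ORDERS_TABLE_ARN]},
    ],
}


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Rough local check: explicit Deny wins, otherwise any matching Allow. Not full IAM logic."""
    def as_list(value):
        return value if isinstance(value, list) else [value]

    def matches(stmt: dict) -> bool:
        return (any(fnmatch.fnmatchcase(action, a) for a in as_list(stmt["Action"]))
                and any(fnmatch.fnmatchcase(resource, r) for r in as_list(stmt["Resource"])))

    hits = [s for s in policy["Statement"] if matches(s)]
    if any(s["Effect"] == "Deny" for s in hits):
        return False
    return any(s["Effect"] == "Allow" for s in hits)


print(json.dumps(agent_policy, indent=2))
print(is_allowed(agent_policy, "dynamodb:Query", ORDERS_TABLE_ARN))       # True
print(is_allowed(agent_policy, "dynamodb:DeleteItem", ORDERS_TABLE_ARN))  # False
```

Starting from an explicit allow-list like this, and widening it only when an agent demonstrably needs more, keeps the blast radius of a misbehaving agent small.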

The Road Ahead: Our Cruz Street POV

We believe agentic AI is not a trend — it’s a tectonic shift in how businesses interact with data. Within 3–5 years, enterprise teams won’t ask, “Should we connect our AI to our data?” but, “Why isn’t our AI already handling this?”

Database and knowledge base integration will be table stakes. The differentiator will be how well your agents reason, adapt and act across your systems. If you’re building on AWS, you’re already halfway there.

Let us know if you’d like Cruz Street’s help mapping the AI future to your infrastructure. Because in the agentic era, the only real limit is what your AI can access.